Rootless act_runner for Gitea

In this article, I’ll show how I switched my Gitea instance’s act_runner from the gitea/act_runner image to gitea/act_runner:latest-dind-rootless.

Why all this effort? Because the standard act_runner runs in Docker and, to function, needs access to the host’s Docker daemon through /var/run/docker.sock, which is passed into the container as a bind mount. The daemon behind this socket runs as root, so whoever can talk to it has virtually full control over the host system.

“RULE #1 - Do not expose the Docker daemon socket (even to the containers)” - OWASP / Docker Security Cheat Sheet

This is highly risky, because executing job code written by third parties is exactly what a runner is designed to do. It’s an open gateway. Combined with direct access to the host system, a successful attack could result in a total failure or hostile takeover of my infrastructure.

Danger from docker.sock

In this guide, I follow OWASP’s recommendations on Docker security and apply its rules in practice. OWASP stands for “Open Worldwide Application Security Project” (originally “Open Web Application Security Project”). It is a nonprofit organization dedicated to improving software security that supports users like me with free articles, documentation, and tools.

The measures described under Rule 1 are:

Leave Docker’s tcp socket disabled

If the Docker daemon is accessible via tcp, clients can connect to it over an unencrypted connection and without any authentication, unless further precautions such as TLS have been taken. If that port is reachable from the internet, virtually anyone can then control the daemon.

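So how do I ensure that the tcp socket is disabled? The Docker docs describe how to enable remote access to the daemon, either with a tcp:// entry in the hosts list of /etc/docker/daemon.json or with a -H flag carrying a tcp:// address in the daemon’s systemd unit. To keep the socket closed, I apply these instructions in reverse and make sure neither setting is present. The docs, too, explicitly warn against opening the tcp socket without protection.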

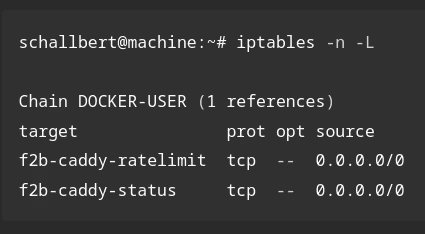
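The Docker docs enable TCP either through a `tcp://` entry in the `hosts` list of `/etc/docker/daemon.json` or through a `-H` flag with a `tcp://` address in the daemon’s systemd unit, so both places can be searched directly. The following is a minimal sketch, assuming the daemon is configured only through these files; the function name `find_tcp_hosts` is my own. It prints every line containing `tcp://`, prefixed with file name and line number.

```shell
#!/bin/sh
# Sketch: list every tcp:// listen address configured for dockerd.
# Assumption: the daemon is configured via daemon.json and/or systemd
# unit files; pass whichever of these files exist on your host.
find_tcp_hosts() {
  for f in "$@"; do
    # Skip files that do not exist (e.g. no daemon.json at all)
    [ -r "$f" ] || continue
    # /dev/null as a second file makes grep prefix each hit with "$f:"
    grep -n 'tcp://' "$f" /dev/null || true
  done
}
```

Called as `find_tcp_hosts /etc/docker/daemon.json /lib/systemd/system/docker.service /etc/systemd/system/docker.service.d/*.conf`, it should print nothing. Unit file locations differ between distributions; `systemctl cat docker.service` shows the unit together with its drop-ins.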

After completing all the necessary steps, I run netstat once. With the options -l (listening sockets only), -n (numeric addresses), -t (TCP) and -p (owning process), it lists every process that accepts TCP connections:

```
# check if the Docker daemon's ("dockerd") tcp socket is exposed
schallbert@machine:~# netstat -lntp | grep dockerd
# the test passes if this command does not return anything
```

I don’t find any entry for dockerd, so the daemon is not reachable over TCP and my server isn’t exposed in this respect.
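The netstat check can also be turned into a pass/fail step, for example to repeat it after system updates. This sketch reads the output of `netstat -lntp` or `ss -lntp` from standard input and reports a hit if a listener belongs to `dockerd` or uses the conventional Docker ports 2375/2376. Checking the ports is my own addition: if TCP were enabled through systemd socket activation, the listening process would show up as `systemd` rather than `dockerd`, and a plain grep for dockerd would miss it. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: exit 0 ("exposed") if dockerd, or anything on the conventional
# Docker ports 2375/2376, is listening on TCP; exit 1 otherwise.
# Reads `netstat -lntp` or `ss -lntp` output on stdin.
docker_tcp_exposed() {
  awk '
    # a listener owned by dockerd, whatever the port
    /dockerd/ { found = 1 }
    # any address field ending in :2375 or :2376 (IPv4 or IPv6)
    { for (i = 1; i <= NF; i++) if ($i ~ /:(2375|2376)$/) found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: `if netstat -lntp | docker_tcp_exposed; then echo "FAIL: Docker daemon reachable via TCP"; exit 1; fi`. Run it as root, since -p only shows other users’ processes with sufficient privileges.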

Do not include the Docker socket /var/run/docker.sock in other containers

This is where things get a bit more complicated: act_runner needs the socket to create, manage, and ultimately dispose of job containers. Without access to the Docker daemon via the socket, the build pipeline simply won’t work, unless you want to forgo Docker entirely and run both the runner and the build jobs directly on the host machine. That comes with many disadvantages: loss of encapsulation, lack of portability, poor scalability, reduced security…

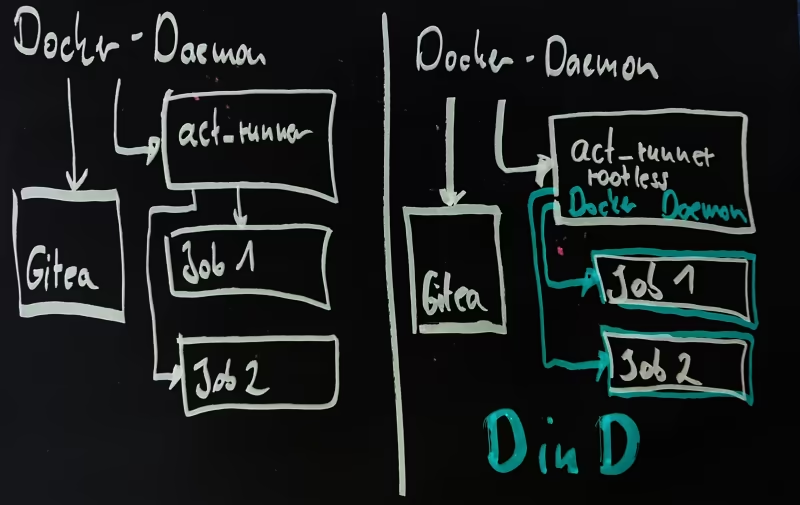

But there’s also a solution that keeps Docker: running act_runner as Docker-in-Docker. In this setup, act_runner gets its own Docker daemon inside its container, running with limited permissions and without access to the host’s daemon. This inner daemon takes over the lifecycle of the job containers, so they run independently of the host’s Docker installation.

Docker in Docker

The following diagram illustrates the difference:

Starting act_runner “rootless”

So I follow the instructions and put together a suitable docker-compose.yml. Two settings are important here:

- `privileged: true` must be set. Otherwise, the Docker daemon inside the act_runner container cannot start properly because it lacks access to kernel features it needs. The entire container then crashes repeatedly without producing any helpful error message.
- The environment variable `DOCKER_HOST=unix:///var/run/user/1000/docker.sock` must be set. It points the runner to the socket of the rootless daemon, which runs as the non-privileged user with UID 1000 inside the container. The runner uses this socket to manage job containers; the daemon is encapsulated in the container and not connected to the host’s daemon.

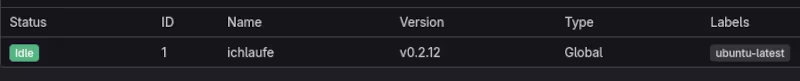
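Put together, a compose file along these lines could look like the following sketch, written via a heredoc so it can be pasted into a shell. The image tag, `privileged: true` and `DOCKER_HOST` come from this article; the service name `runner`, the `./data` directory, and reading `GITEA_INSTANCE_URL` and `GITEA_RUNNER_REGISTRATION_TOKEN` from a `.env` file are my assumptions. Note what is missing: there is no bind mount of `/var/run/docker.sock`.

```shell
#!/bin/sh
# Sketch: write a docker-compose.yml for the rootless Docker-in-Docker runner.
# Assumptions: service name "runner", data directory ./data, instance URL
# and registration token supplied via a .env file next to the compose file.
write_runner_compose() {
  mkdir -p "$1"    # $1: target directory
  # Quoted 'EOF' keeps ${...} literal so docker compose interpolates them
  cat > "$1/docker-compose.yml" <<'EOF'
services:
  runner:
    image: gitea/act_runner:latest-dind-rootless
    restart: always
    # required: the inner dockerd needs kernel features (mounts, namespaces)
    privileged: true
    volumes:
      # runner state only; deliberately no /var/run/docker.sock from the host
      - ./data:/data
    environment:
      # talk to the rootless daemon inside this container, not the host's
      - DOCKER_HOST=unix:///var/run/user/1000/docker.sock
      - GITEA_INSTANCE_URL=${GITEA_INSTANCE_URL}
      - GITEA_RUNNER_REGISTRATION_TOKEN=${GITEA_RUNNER_REGISTRATION_TOKEN}
EOF
}
```

After sourcing the file, `write_runner_compose /opt/gitea-runner` (a hypothetical path) creates the compose file, and `docker compose up -d` in that directory starts the runner.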

As of July 2025: Problem with 0.2.12-dind-rootless

This is where I encountered my first problem. The runner crashes shortly after starting with the following error message:

```
schallbert@machine:~# docker logs gitea-runner
# [...]
[rootlesskit:parent] error: failed to start the child: fork/exec /proc/self/exe: operation not permitted
```

Help came from the Gitea community: with the previous version, 0.2.11, act_runner runs smoothly. However, for reasons unknown to me, Gitea displays the runner’s version as 0.2.12.
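Pinning the image to a fixed release keeps `latest-dind-rootless` from pulling the broken version again on the next update. A minimal sketch, assuming the tag for 0.2.11 follows the same `<version>-dind-rootless` pattern as the 0.2.12 tag mentioned above; the helper name is hypothetical, and it simply rewrites the image line of an existing compose file.

```shell
#!/bin/sh
# Sketch: pin the runner image to a fixed release in docker-compose.yml.
# Assumption: tags follow the "<version>-dind-rootless" pattern.
pin_runner_image() {
  # $1: path to docker-compose.yml, $2: version, e.g. 0.2.11
  # Rewrite any gitea/act_runner:<something>dind-rootless image reference
  sed "s|gitea/act_runner:[A-Za-z0-9._-]*dind-rootless|gitea/act_runner:$2-dind-rootless|" \
    "$1" > "$1.new" && mv "$1.new" "$1"
}
```

Usage: `pin_runner_image docker-compose.yml 0.2.11`, then `docker compose pull && docker compose up -d`. The trade-off is that with a fixed tag, updates become a deliberate step instead of happening silently.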

Volume Confusion

Great! Now that the runner is working, I’ll let it run a job right away. Unfortunately, the build fails after just a fraction of a second with this message:

```
# Runner step: Set up job
failed to start container: Error response from daemon: error while creating mount source path '<volumeSourceFullPath>': mkdir <volumeSourcePath>: permission denied
```

I researched this for hours and was under the false impression for a long time that insufficient permissions on the folders of the host machine were to blame. Only later did I truly understand that Docker-in-Docker means exactly what it says: not only are containers created by containers, but a separate Docker daemon runs inside the container!

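This means that the classic method of making host directories available in job containers with -v /a/b:/x/y no longer works: the inner daemon resolves the source path /a/b within the act_runner container’s filesystem, not on the host. If the path doesn’t exist there, the daemon tries to create it and, running as an unprivileged user, fails, which matches the mkdir error above.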

Instead, volumes must now be passed through. Example:

host directory `/opt/server/www/blog-artifacts` -> act_runner volume `/tmp/blog-artifacts:z` -> job container volume `/tmp/blog-artifacts`

The :z on the act_runner volume is important here. It tells Docker that the bind-mounted content is shared between several containers (technically, Docker relabels it with a shared SELinux label). These volumes must not only be specified in act_runner’s docker-compose.yml; the workflow files in the .gitea/workflows/ folder must also be adjusted accordingly. There, however, the :z is not needed.
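Both halves of the pass-through can be sketched as follows. The runner side goes into a `docker-compose.override.yml`, which docker compose merges into `docker-compose.yml` automatically; the job side is a workflow using `container.volumes`. All names here (the service `runner`, the workflow `build-blog.yml`, the job `build`, the label `ubuntu-latest`, the image `node:20`) are hypothetical placeholders.

```shell
#!/bin/sh
# Sketch of both halves of the volume pass-through.
# Hypothetical names: service "runner", workflow "build-blog.yml",
# job "build", runner label "ubuntu-latest", image "node:20".

# Runner side: host directory -> path inside the act_runner container.
write_runner_override() {
  mkdir -p "$1"    # $1: directory holding the runner's docker-compose.yml
  cat > "$1/docker-compose.override.yml" <<'EOF'
services:
  runner:
    volumes:
      - /opt/server/www/blog-artifacts:/tmp/blog-artifacts:z
EOF
}

# Job side: path inside act_runner -> path inside the job container.
write_job_workflow() {
  mkdir -p "$1/.gitea/workflows"    # $1: repository root
  cat > "$1/.gitea/workflows/build-blog.yml" <<'EOF'
name: build-blog
on: [push]
jobs:
  build:
    runs-on: ubuntu-latest
    container:
      image: node:20
      volumes:
        # source is the act_runner path, not the host path
        - /tmp/blog-artifacts:/tmp/blog-artifacts
    steps:
      - run: ls -la /tmp/blog-artifacts
EOF
}
```

The source of the job-side volume is the path inside the act_runner container, because the job container is created by the inner daemon. act_runner still ignores this mount unless the source is allowed in its valid_volumes setting.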

“is not a valid volume”

Despite all my efforts, my jobs still don’t run. This time, the cause is an error message that already looked familiar:

```
# Runner step: Set up job
[/tmp/blog-artifacts] is not a valid volume, will be ignored
```

So I open act_runner’s config.yml and add the volume paths to valid_volumes:

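```yaml
# /gitea/runner/config.yml
# [...]
container:
  # [...]
  # bind-mount sources that workflows may request (glob patterns allowed)
  valid_volumes: ["/tmp/blog-artifacts", "/tmp/lectures-artifacts"]
  # [...]
```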

In this file, I can leave privileged: false because, unlike act_runner itself, the job containers don’t run their own Docker daemon and therefore don’t need the extra kernel privileges.
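The warning above shows the part of the volume before the colon, so that is what gets checked against valid_volumes. To catch a missing entry before pushing a workflow, a rough pre-flight check could look like this sketch. It is only an approximation: act_runner documents glob syntax for valid_volumes, and shell `case` patterns behave similarly only for simple patterns. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: approximate act_runner's valid_volumes check for one volume.
# Assumption: shell `case` globbing stands in for act_runner's glob matching.
volume_allowed() {
  # $1: volume as written in the workflow ("src:dst"); remaining args: patterns
  src=${1%%:*}    # the part before the first ":" is what gets validated
  shift
  for pattern in "$@"; do
    # $pattern is deliberately unquoted so *, ? and [...] act as globs
    case "$src" in
      $pattern) return 0 ;;
    esac
  done
  return 1
}
```

`volume_allowed /tmp/blog-artifacts:/tmp/blog-artifacts /tmp/blog-artifacts /tmp/lectures-artifacts` succeeds, while a workflow asking for `/etc:/host-etc` would be rejected.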

Set permissions correctly

Now I’m getting permission denied errors again when running my jobs, although no longer in the very first step. Since I’ve now checked the volume paths down to the last detail, the folder permissions on the host machine remain the only plausible cause.

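For the artifacts created by the job container to be stored on my host via the volumes, I have to transfer ownership of the target directory and, with -R, all its subfolders to the non-privileged user with UID 1000 defined earlier. A job container running as root writes its files as exactly this UID, because the rootless daemon maps the container’s root user to the user it runs as: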

```
schallbert@machine:~# chown -R 1000:1000 /target/path/to/artifact/
```
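To verify the result, and to notice later if files show up under a different owner, the directory can be searched for anything not owned by UID 1000. A minimal sketch with a hypothetical helper name; one possible source of such files (an assumption on my part) is a job container running as a non-root user, whose UID the rootless daemon remaps to a different range.

```shell
#!/bin/sh
# Sketch: list everything under a directory that is NOT owned by a given
# numeric UID, e.g. to confirm that `chown -R 1000:1000` covered all files.
foreign_owned() {
  # $1: directory to inspect, $2: expected numeric UID (1000 in this setup)
  find "$1" ! -user "$2" -print
}
```

`foreign_owned /target/path/to/artifact/ 1000` prints nothing if the chown covered everything.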

Finally, everything is working smoothly, and I’ve put a stop to the (unlikely, but possible) takeover of my host system by malicious job containers.