Gitea: stop search indexer

After ages, I took a trip to the Google Search Console just for fun. Normally, I’m not interested in it because I don’t use Google myself and assumed that the search engines would do their job well out of self-interest.

I was confused when I realized that the majority of my pages didn’t even make it through the indexer.

The problem

How are people supposed to find my website? Even if they knew the correct search terms, they would hardly see anything on Google. After all, the console clearly stated that none of my blog posts appeared in the indexer. On my Gitea instance, on the other hand, several thousand pages were listed. A clear disproportion. And why does Gitea generate such a high volume of files?

Google’s crawler (a program that searches the web for content and makes it indexable for search) apparently finds every commit, no matter how small, in public repositories as well as the files behind them. Automation runs and other metadata are also indexed.

This is of course completely unnecessary and wastes energy in an area that I would much rather have in the presentation of my blog. So a solution is needed.

Possible solutions

A few possibilities immediately occurred to me:

- Configure the crawler for each repository so that only the top folder level is searched.

- Completely switch off search indexing for

git.schallbert.de. - Use Search Console to make corrections until the indexer shows correct assignments on my blog posts.

The most obvious solution for me was to completely switch off the indexer for my Gitea instance. Correction loops only take effect days or weeks later with Google’s crawler and turned out too time-consuming. I deemed a separate robots.txt for each repository too complicated.

My implementation

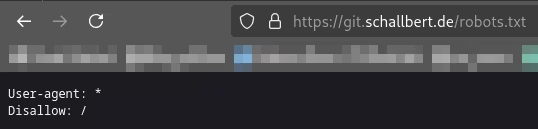

I read the Gitea documentation. The suggestion of a robots.txt made sense to me immediately, as did its contents:

User-agent: *

Disallow: /

This tells crawlers from any party * that nothing should be indexed from the root directory / onwards.

Unfortunately, I had no idea how Gitea makes this file available in its instance:

To make Gitea serve a custom robots.txt (default: empty 404) for top level installations, create a file with path

public/robots.txtin thecustomfolder orCustomPath.

Gitea’s configuration directory

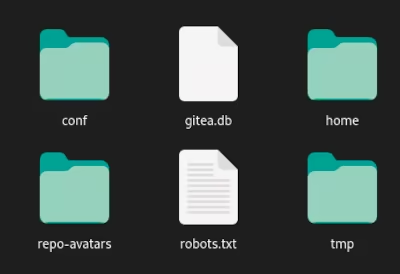

So I experimented a bit. I created a public folder with the corresponding file, then a custom folder at different levels within the Gitea folder structure. Each time I restarted the container and checked whether the robots file also appeared on the server.

Nothing.

Nothing.

But then I got rid of the folder names and simply put the file in the Gitea configuration directory - the lowest level at which gitea no longer appears as a folder name. And this was the solution.

Test

After restarting, I was able to successfully display the file in the browser.

In the meantime, Google is also starting to remove the unwanted pages from the indexer. My blog articles will hopefully also be available in Google search over the next few weeks.

In the meantime, Google is also starting to remove the unwanted pages from the indexer. My blog articles will hopefully also be available in Google search over the next few weeks.