Automatic Upgrades for everything!

As the title says, I want security updates to be installed as soon as they are released. This applies to Ubuntu on my cloud server as well as to all applications I run in Docker. Being lazy, I don’t want to install these security updates manually.

For feature updates and enhancements, however, I’m fine with stepping in manually from time to time. Let’s start with the system upgrades.

Security updates via unattended-upgrades

As a quick search reveals, Ubuntu ships a configurable tool for automatic upgrades called unattended-upgrades.

Installation

First, I check whether it is already installed on my system:

```
schallbert:~# which unattended-upgrades
/usr/bin/unattended-upgrades
```

Great. Otherwise I’d install it with apt install unattended-upgrades.
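For a setup script, the check and the fallback installation can be folded into one step that is safe to run repeatedly. A minimal sketch, assuming a Debian/Ubuntu host with apt-get and root privileges for the install branch; the function name ensure_unattended_upgrades is hypothetical:

```shell
# Hypothetical helper: install unattended-upgrades only if its binary
# is not already on the PATH. The install branch must run as root.
ensure_unattended_upgrades() {
  if command -v unattended-upgrades >/dev/null 2>&1; then
    echo "unattended-upgrades already installed"
  else
    apt-get update && apt-get install -y unattended-upgrades
  fi
}
```

Called a second time, the function only prints the message and installs nothing.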

Configure unattended-upgrades

The Debian Wiki to the rescue: the configuration files live in /etc/apt/apt.conf.d.

First, I make sure that automatic upgrades are active. To do so, I open 20auto-upgrades and check that it contains the following lines:

```
APT::Periodic::Update-Package-Lists "1";
APT::Periodic::Unattended-Upgrade "1";
```

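Very well: automatic package-list updates and unattended upgrades are both active, each running once a day (the value is the interval in days). Next, I choose which upgrades are installed and when the machine may reboot. Both are configured in 50unattended-upgrades, mostly by uncommenting existing lines (removing the leading //). The time of day at which the upgrades run is not set there; it comes from systemd’s apt-daily and apt-daily-upgrade timers.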
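To confirm that APT actually picks up both periodic settings, the merged configuration printed by apt-config dump can be checked. A minimal sketch; the helper check_periodic is hypothetical and treats any value other than "1" as a deviation, even though larger values would simply mean a longer interval:

```shell
# Hypothetical helper: read `apt-config dump` output on stdin and report
# whether both periodic settings are set to "1" (once a day).
check_periodic() {
  local dump lists upgrade
  dump=$(cat)
  lists=$(printf '%s\n' "$dump" |
    sed -n 's/^APT::Periodic::Update-Package-Lists "\(.*\)";$/\1/p')
  upgrade=$(printf '%s\n' "$dump" |
    sed -n 's/^APT::Periodic::Unattended-Upgrade "\(.*\)";$/\1/p')
  if [ "$lists" = "1" ] && [ "$upgrade" = "1" ]; then
    echo "periodic upgrades enabled"
  else
    echo "periodic upgrades NOT enabled (lists='$lists', upgrade='$upgrade')"
    return 1
  fi
}
# Usage on the server: apt-config dump | check_periodic
```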

Upgrade sources

The first section of the configuration file defines the permitted sources for updates. Of course, only trustworthy providers belong here. My configuration allows packages from my Ubuntu release, its security updates, and the ESM (Expanded Security Maintenance) updates for applications and infrastructure. It looks like this:

```
Unattended-Upgrade::Allowed-Origins {
    "${distro_id}:${distro_codename}";
    "${distro_id}:${distro_codename}-security";
    "${distro_id}ESMApps:${distro_codename}-apps-security";
    "${distro_id}ESM:${distro_codename}-infra-security";
};
```
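The two placeholders are filled in with the running system’s distribution id and codename, which is why the log excerpt further down lists entries such as o=UbuntuESMApps,a=jammy-apps-security. The following sketch imitates that substitution to show how each configured line maps to a log entry; expand_origin is a hypothetical helper, not part of unattended-upgrades. On Ubuntu, lsb_release -is and lsb_release -cs print matching values for the id and codename:

```shell
# Hypothetical helper: expand the ${distro_id} and ${distro_codename}
# placeholders of one Allowed-Origins entry and print it in the
# o=<origin>,a=<archive> form seen in unattended-upgrades.log.
# Illustration only; assumes id and codename contain no "/" or "&".
expand_origin() {
  local expanded
  expanded=$(printf '%s\n' "$1" |
    sed -e 's/[$]{distro_id}/'"$2"'/g' -e 's/[$]{distro_codename}/'"$3"'/g')
  # The part before the first ":" is the origin, the rest is the archive.
  printf 'o=%s,a=%s\n' "${expanded%%:*}" "${expanded#*:}"
}
# Usage: expand_origin '${distro_id}:${distro_codename}-security' "$(lsb_release -is)" "$(lsb_release -cs)"
```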

Updates for dev releases, too?

I have the value set to auto:

Unattended-Upgrade::DevRelease "auto";

Automatic reboot

Some security updates (kernel updates, for example) only take effect after a restart. I therefore let unattended-upgrades reboot the machine automatically by setting the values below. Automatic-Reboot-WithUsers is a matter of taste: set to "true", it reboots even if users are logged in, so you may be logged out abruptly if an update requiring a restart has just been installed. To practically rule this out, I set Automatic-Reboot-Time to a time when I am certainly not working on the machine.

```
Unattended-Upgrade::Automatic-Reboot "true";
Unattended-Upgrade::Automatic-Reboot-WithUsers "true";
Unattended-Upgrade::Automatic-Reboot-Time "03:45";
```
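Whether a reboot is pending can also be checked by hand: on Ubuntu, packages that need a restart create the flag file /var/run/reboot-required and list themselves in /var/run/reboot-required.pkgs, and this flag file is what unattended-upgrades looks at before an automatic reboot. A minimal sketch with a hypothetical helper reboot_pending:

```shell
# Hypothetical helper: report whether a reboot is pending and, if so,
# which packages requested it. The directory defaults to /var/run and is
# a parameter only so the function can be tried on a scratch directory.
reboot_pending() {
  local dir="${1:-/var/run}"
  if [ -f "$dir/reboot-required" ]; then
    echo "reboot required"
    # The .pkgs file may list a package more than once; show each once.
    if [ -f "$dir/reboot-required.pkgs" ]; then
      sort -u "$dir/reboot-required.pkgs"
    fi
    return 0
  fi
  echo "no reboot required"
  return 1
}
```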

Of course, an automatic reboot affects every program I run. I therefore make sure that each service in my docker-compose.yml files has restart: always or restart: unless-stopped, so that its container comes back up after the reboot.
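Instead of reading every docker-compose.yml, the restart policies Docker actually applies can be listed with docker inspect. A minimal sketch, assuming the "<name> <policy>" output format shown in the usage comment; no_restart_after_reboot is a hypothetical helper that flags every container whose policy is neither always nor unless-stopped:

```shell
# Hypothetical helper: read "<container name> <restart policy>" lines on
# stdin and print every container whose policy is neither "always" nor
# "unless-stopped".
no_restart_after_reboot() {
  local name policy
  while read -r name policy; do
    case "$policy" in
      always|unless-stopped) ;;
      *) echo "$name (${policy:-none})" ;;
    esac
  done
}
# Usage on the server:
#   docker inspect --format '{{.Name}} {{.HostConfig.RestartPolicy.Name}}' $(docker ps -aq) | no_restart_after_reboot
```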

Notifications

For now, I haven’t enabled e-mails or other push notifications: I’m still testing and don’t want to receive even more messages. As soon as I change this, I’ll post an update here.

Function test

To see if unattended-upgrades does what it is supposed to do, I take a look at the logs under /var/log/unattended-upgrades.

The file unattended-upgrades.log contains the following, for example:

```
# [...]
2024-01-12 06:17:59,781 INFO Starting unattended upgrades script
2024-01-12 06:17:59,781 INFO Allowed origins are: o=Ubuntu,a=jammy, o=Ubuntu,a=jammy-security, o=UbuntuESMApps,a=jammy-apps-security, o>
2024-01-12 06:17:59,781 INFO Initial blacklist:
2024-01-12 06:17:59,782 INFO Initial whitelist (not strict):
2024-01-12 06:14:33,956 INFO Packages that will be upgraded: libc-bin libc-dev-bin libc-devtools libc6 libc6-dev locales python3-twisted
2024-01-12 06:14:33,956 INFO Writing dpkg log to /var/log/unattended-upgrades/unattended-upgrades-dpkg.log
2024-01-12 06:14:54,578 INFO All upgrades installed
# [...]
```

The file unattended-upgrades-shutdown.log, however, is still empty. I’ll check later whether everything works there, too.
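To pull the package list of the latest run out of unattended-upgrades.log, a simple text filter is enough. A minimal sketch with a hypothetical helper last_upgraded_packages; the usage comment also shows unattended-upgrade --dry-run --debug, which simulates a run immediately instead of waiting for the next scheduled one:

```shell
# Hypothetical helper: print the package list of the most recent
# "Packages that will be upgraded" entry in unattended-upgrades.log (stdin).
last_upgraded_packages() {
  grep 'Packages that will be upgraded:' |
    tail -n 1 |
    sed 's/.*Packages that will be upgraded: //'
}
# Usage:
#   last_upgraded_packages < /var/log/unattended-upgrades/unattended-upgrades.log
# Simulate a run right away without installing anything:
#   sudo unattended-upgrade --dry-run --debug
```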

Automate container updates with Watchtower

Now to the updates of my applications. A quick chat with a couple of admins revealed this:

- It is tedious to keep all programs up to date manually.

- For containerized applications, there are services that handle this task centrally.

- There have been bad experiences with referencing the latest release in docker-compose.yml: with image: <application>:latest, not all applications run stably, and some cause dependency problems.

One such solution is Watchtower, which itself runs in a container.

Installation

As usual, I create a docker-compose.yml in a new folder, /opt/watchtower:

```
# copied from https://containrrr.dev/watchtower/notifications/
# watchtower/docker-compose.yml
version: "3"
services:
  watchtower:
    image: containrrr/watchtower
    restart: unless-stopped
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
```

Watchtower needs access to docker.sock to talk to the Docker daemon: that is how it pulls new images and recreates the existing containers with them.

Apart from that, I haven’t configured anything for the time being: no notifications (for the reasons mentioned above), no logging options, nothing. I hope this setup keeps working and I won’t have to post an update here anytime soon.

Further steps, for example to verify that Watchtower works, can be found in a tutorial by DigitalOcean.

Procedure

According to the documentation, Watchtower checks the images of the running containers for updates once every 24 hours by default. If a new image has been released, Watchtower pulls it and sends SIGTERM to the container to be updated, which then shuts down (source). The container is then recreated from the new image with the same options it was originally started with.
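Rather than waiting up to a day for the next check, a single check can be triggered with Watchtower’s --run-once option, optionally limited to specific container names. A minimal sketch, assuming the image containrrr/watchtower as in the docker-compose.yml above; the wrapper name watchtower_run_once is hypothetical:

```shell
# Hypothetical wrapper: start a throwaway Watchtower container that checks
# once and exits (--run-once). Optional arguments are container names to
# limit the check to; without them, all running containers are checked.
watchtower_run_once() {
  docker run --rm \
    -v /var/run/docker.sock:/var/run/docker.sock \
    containrrr/watchtower --run-once "$@"
}
# Usage: watchtower_run_once fail2ban
```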

Involuntary functional test

A few days after starting the container, I logged into my server again and looked through some logs at random, just to see what was going on.

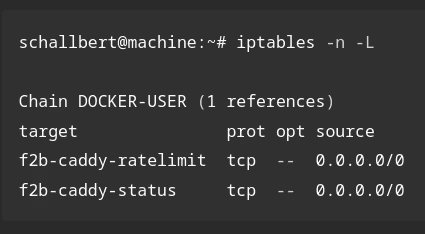

In the fail2ban log I suddenly saw pages and pages of entries at one point in time, 14:42:56: more than in all the days before combined. When I scrolled up to the beginning of this run of entries, it looked as if fail2ban had stopped for a moment:

```
2024-01-26 14:42:50,817 <container_id> INFO Shutdown in progress...
2024-01-26 14:42:50,817 <container_id> INFO Observer stop ... try to end queue 5 seconds
2024-01-26 14:42:50,837 <daemon_id> INFO Observer stopped, 0 events remaining.
2024-01-26 14:42:50,878 <container_id> INFO Stopping all jails
2024-01-26 14:42:50,879 <container_id> INFO Removed logfile: '/var/log/auth.log'
2024-01-26 14:42:50,879 <container_id> INFO Removed logfile: '/var/log/caddy2/gitea_access.log'
2024-01-26 14:42:50,879 <container_id> INFO Removed logfile: '/var/log/caddy2/server_access.log'
2024-01-26 14:42:51,052 <daemon_id> NOTIC [sshd] Flush ticket(s) with iptables
2024-01-26 14:42:51,063 <container_id> INFO Jail 'sshd' stopped
2024-01-26 14:42:51,137 <daemon_id> NOTIC [caddy-status] Flush ticket(s) with iptables-multiport
2024-01-26 14:42:51,137 <container_id> INFO Jail 'caddy-status' stopped
2024-01-26 14:42:51,138 <container_id> INFO Connection to database closed.
2024-01-26 14:42:51,139 <container_id> INFO Exiting Fail2ban
2024-01-26 14:42:56,173 <new_container_id> INFO --------------------------------------------------
2024-01-26 14:42:56,173 <new_container_id> INFO Starting Fail2ban v1.0.2
2024-01-26 14:42:56,173 <new_container_id> INFO Observer start...
[...]
```

The flood of entries with identical timestamps begins right after the excerpt shown above: a whole bunch of IP addresses are loaded there, ready to be banned. Slightly worried, I looked at the Ubuntu syslog:

```
Jan 26 14:42:55 schallbert systemd[1]: docker-<ID>.scope: Deactivated successful
Jan 26 14:42:55 schallbert systemd[1]: docker-<ID>.scope: Consumed 21:05 CPU time
Jan 26 14:42:55 schallbert dockerd[690]: time="2024-01-26T14:42:55.228607617Z" level=info msg="ignoring event"
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.229864632Z" level=info msg="shim disconnected"
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.230125409Z" level=warning msg="cleaning up after shim disconnected" i>
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.230145478Z" level=info msg="cleaning up dead shim"
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.240586557Z" level=warning msg="cleanup warnings time=\"2024-01-26T14:>
Jan 26 14:42:55 schallbert dockerd[690]: time="2024-01-26T14:42:55.241839655Z" level=warning msg="ShouldRestart failed
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.870912387Z" level=info msg="loading plugin \"io.containerd.event.v1.p>
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.870997689Z" level=info msg="loading plugin \"io.containerd.internal.v>
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.871007368Z" level=info msg="loading plugin \"io.containerd.ttrpc.v1.t>
Jan 26 14:42:55 schallbert containerd[639]: time="2024-01-26T14:42:55.871150831Z" level=info msg="starting signal loop" namespace=moby path>
```

Hm, cleaning up dead shim. That sounds ominous. A quick search shows that the shim is an intermediate layer between the container manager (here containerd) and the container itself, and forwards the container’s input and output. Its lifetime is tied to that of the container.

In other words, what I saw was a regular shutdown followed by a restart. Fortunately, no break-in! All that remained was to find out why fail2ban had restarted, which reminded me that I didn’t even know when Watchtower runs its updates…

So I took a quick look at the Watchtower logs with docker container logs <container_id>, and behold:

```
time="2024-01-26T14:42:47Z" level=info msg="Found new lscr.io/linuxserver/fail2ban:latest image (9bda077d765e)"
time="2024-01-26T14:42:50Z" level=info msg="Stopping /fail2ban (e0317a105d53) with SIGTERM"
time="2024-01-26T14:42:55Z" level=info msg="Creating /fail2ban"
time="2024-01-26T14:42:55Z" level=info msg="Session done" Failed=0 Scanned=6 Updated=1 notify=no
```

Now I have proof: Watchtower does what it is supposed to do. At its next check after a new image is released, it updates the affected container. Great!
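With several containers, the relevant lines can be filtered out of the Watchtower log. A minimal sketch with a hypothetical helper watchtower_updates that prints the timestamp and image of each "Found new … image" entry, matching the format of the log lines above:

```shell
# Hypothetical helper: read Watchtower log lines on stdin and print the
# timestamp and image of every "Found new ... image" entry.
watchtower_updates() {
  sed -n 's/^time="\([^"]*\)".*msg="Found new \([^ ]*\) image.*/\1 \2/p'
}
# Usage (Watchtower may log to stderr, hence 2>&1):
#   docker container logs <container_id> 2>&1 | watchtower_updates
```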

PS: Since I have hardly configured Watchtower, I conclude from the log timestamps that it checks for updates daily at the time of day it was started. I do wonder, though, whether I should pin a specific image version instead of using latest.
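If the daily check should instead happen at a fixed time, for example after the 03:45 reboot time, Watchtower accepts a 6-field cron expression (seconds first) via --schedule or the WATCHTOWER_SCHEDULE environment variable; it cannot be combined with --interval. The sketch below uses docker run rather than the docker-compose.yml from above, is meant to replace that container rather than run alongside it, and uses an arbitrary example time of 04:30. The expression is evaluated in the container’s time zone, which is UTC unless configured otherwise. The wrapper name start_watchtower_scheduled is hypothetical:

```shell
# Hypothetical wrapper: run Watchtower with a fixed daily check time
# instead of the default 24-hour interval. --schedule takes a 6-field
# cron expression with seconds first; "0 30 4 * * *" means 04:30:00 daily.
start_watchtower_scheduled() {
  local schedule="${1:-0 30 4 * * *}"
  docker run -d --name watchtower --restart unless-stopped \
    -v /var/run/docker.sock:/var/run/docker.sock \
    containrrr/watchtower --schedule "$schedule"
}
# Usage: start_watchtower_scheduled '0 0 5 * * *'
```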