Server configuration with Git

What is it about?

- I want version control for the configuration of server applications

- Discussion of technical solutions for implementation

- Tutorial: Exclude Docker/Gitea/other “secrets” from version control

- Tutorial: Create a deployment pipeline

- Introduce an automation trigger for the subsequent rollout of files on the server

- Next article: Integrate automation on the server and install upgrades

Background

I have Watchtower running on my server to automatically keep the container images of my services up to date and freshly patched. Recently I had a case where my Gitea Action Runner would no longer start automatically and, even when started manually, gave an error message.

Docker had apparently updated itself without my supervision and was now throwing a volume error when starting up the runner container, which I had never seen before. The error message was clear and could easily be fixed with small changes in a configuration file for Gitea. Nevertheless, I now had the feeling that version control for my configuration would help my future self to better understand changes, updates and the reasons behind them.

Preliminary considerations

It sounds like a circular reference to me: I record the configuration files for my server in Gitea, which itself runs on my server. This could become interesting with auto-deployment. But more on that later.

Two options spontaneously come to mind for getting version control with automatic synchronization:

1. Hardlink

This solution would use hardlinks to make the configuration files, which are scattered across many folders on my server, appear in a single folder declared as a repository. Why hardlinks? Because every service on my server runs self-contained and keeps its own configuration files and environment variables together with the service. Without hardlinks, I would have to version the entire service folders and make my .gitignore correspondingly complex.

With Git, I would version the files mapped via hardlinks and make their contents available on Gitea in this way.

schallbert@server:/server-config-files --> hardlinks --> repository --> Gitea

So I would get a downstream “version monitoring” with a backup copy in the sense that the files are now lying around multiple times.
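A minimal sketch of the hardlink idea, run in a throwaway directory rather than on a real server. The folder names `services/gitea` and `config-repo` are placeholders. Hardlinks only work within one file system, and the sketch assumes that files are edited in place; a tool that replaces a file instead of editing it would silently break the link.

```shell
#!/bin/sh
set -e

# Throwaway sandbox standing in for the server's file system.
sandbox="$(mktemp -d)"
mkdir -p "$sandbox/services/gitea" "$sandbox/config-repo/gitea"

# A service keeps its configuration next to its other files.
echo "image: gitea/gitea" > "$sandbox/services/gitea/docker-compose.yml"

# Hardlink the file into the repository folder: both names now refer to the same data.
ln "$sandbox/services/gitea/docker-compose.yml" "$sandbox/config-repo/gitea/docker-compose.yml"

# An in-place edit through one name is visible through the other.
echo "restart: always" >> "$sandbox/services/gitea/docker-compose.yml"
repo_copy="$(cat "$sandbox/config-repo/gitea/docker-compose.yml")"
echo "$repo_copy"
```

Running `git init` inside `config-repo` would then version only the linked files, not the service folders around them.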

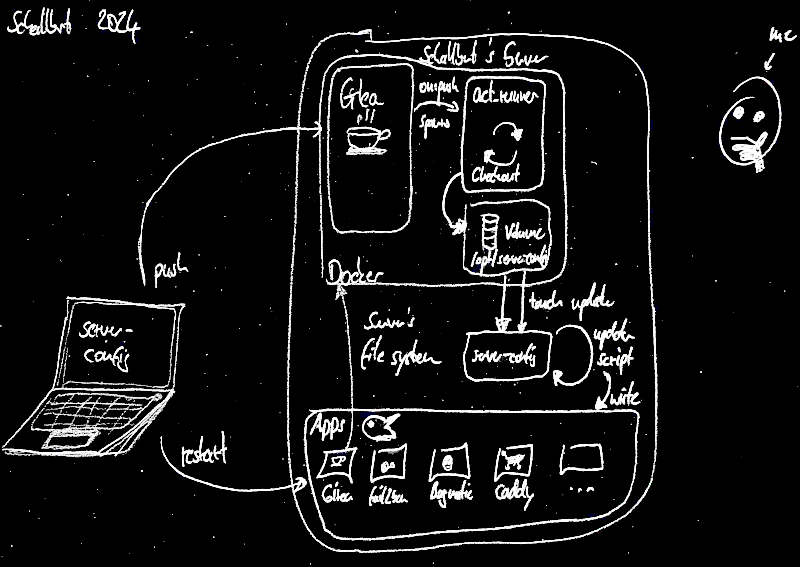

2. Repo and auto-deploy to the server

In this scenario, I keep the configuration files locally on my laptop and can version them with Git as usual. The repository would be mirrored on Gitea, and at the end of the chain, my runner would have to auto-deploy to the server every time the configuration changes and then restart the affected containers.

schallbert@laptop:/server-config-files --> repository --> Gitea --> act-runner --> server:/<service1...serviceN>/config-files

The authoritative “main” copy would live on my laptop, and the server would follow the copy on Gitea.

Problems

In both cases, I don’t have the option of testing changed configurations in advance. Everything I do goes straight to “Prod” and would be live. In the worst case, I can easily mess up my setup.

This is no different from the status quo ante, where I updated the files directly on the server. It is a new problem only in the sense that there was no production operation yet when I first set everything up, and therefore no risk of failure.

Solution two seems to be more complex to implement, because I need a deployment pipeline that rolls out the files in the repository on my server. Especially since the runner is in a Docker container, while the configuration files are located directly in the server’s file system.

Direct access to the server file system is technically possible, but according to my limited understanding it would mean a large attack surface for all my services, whose configuration files could now be directly manipulated via the repository in Gitea.

Selecting my solution

Solution two at least places the immediate live problem on my laptop, so that I don’t have to mess around with the production system in the first step. I think it would be easier to set up an integration environment here with which I can check my configuration changes in advance. Since I have all of my services running in Docker, this could perhaps be solved quite easily.

Let’s get to work

Create a configuration repo

Okay, then the first step is to get the configuration files from the server. To do this, I use the file transfer command scp: scp server:/path/to/source path/to/target about a dozen times until I have caught all the files.

I set up the folder structure in this repo exactly as the files are on the server. I hope that this will make my life a little easier later.
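The repeated scp calls can be sketched as a small loop that recreates the server's folder structure below the repository root. The host alias `server` is taken from the command above; the two remote paths and the target folder `./server-config` are hypothetical placeholders. By default the script only prints what it would do.

```shell
#!/bin/sh
# Print (DRY_RUN=1, the default) or run the copy for one remote file,
# recreating the server's folder structure below the repository root.
fetch_config() {
  host="$1"; remote_path="$2"; repo_root="$3"
  target="$repo_root$remote_path"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "scp $host:$remote_path $target"
  else
    mkdir -p "$(dirname "$target")"
    scp "$host:$remote_path" "$target"
  fi
}

# Hypothetical paths; replace them with the real locations on the server.
for path_on_server in /opt/gitea/docker-compose.yml /opt/caddy2/Caddyfile; do
  fetch_config server "$path_on_server" ./server-config
done
```

Setting `DRY_RUN=0` before running the script performs the copies instead of printing them.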

Secrets in docker-compose.yml

But what do I do with “secrets” in the configuration files? Private keys, registration tokens, hashes? I would rather not have them lying around more or less openly in the repository. When using docker-compose it’s quite simple: I can store secret values in hidden files for environment variables and exclude them from Git tracking. In the simplest case, such files are simply called .env and contain a list of environment variables in the style of

BORG_PASSPHRASE="<redacted>"

In the corresponding docker-compose.yml I pull the variable from the .env file as follows:

- BORG_PASSPHRASE=${BORG_PASSPHRASE}

I make these changes locally on my laptop. In .gitignore, I use the pattern .* so that hidden files, and thus .env, are not included in the repository. But how do I know whether the containers are still booting correctly?
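Whether a hidden file is really excluded can be checked with `git check-ignore` before the first commit. The following sketch works in a throwaway repository; the passphrase is a dummy value, and the negation rule `!.gitignore` is an assumption added here so that the ignore rules themselves stay under version control.

```shell
#!/bin/sh
set -e

# Throwaway repository so the experiment cannot touch a real one.
repo="$(mktemp -d)"
git -C "$repo" init --quiet

# Ignore every hidden file, but keep the ignore rules themselves tracked.
printf '%s\n' '.*' '!.gitignore' > "$repo/.gitignore"

# Stand-in secret file; the value is a dummy.
echo 'BORG_PASSPHRASE="not-a-real-passphrase"' > "$repo/.env"

# check-ignore exits with 0 if the path is ignored.
if git -C "$repo" check-ignore -q .env; then
  echo ".env is ignored"
fi
```

Other hidden files or folders that should be tracked would need their own negation rule in the same way.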

Secrets in Gitea’s app.ini

With Gitea, I’ve had a much harder time storing secrets in files. The app.ini is also written dynamically by Gitea, so the file looks a little different every time the service is restarted. After a long search, I found this issue, in which a solution for storing secrets separately was sought and found.

- The only thing that seems sensible to me is storing the values INTERNAL_TOKEN and SECRET_KEY separately.

- The other two properties LFS_JWT_SECRET and JWT_SECRET are automatically generated anyway and regularly overwritten.

In my configuration I only use INTERNAL_TOKEN. So I will copy it in plain text and without quotes into a hidden file (.INTERNAL_TOKEN) and make it available to the container via a Docker volume:

    # Gitea's docker-compose.yml
    # [...]
    volumes:
      - ./.INTERNAL_TOKEN:/run/secrets/INTERNAL_TOKEN:ro
    # [...]
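Creating the hidden token file can be sketched as follows. The token is a dummy value and the working directory is a temporary folder. That the file should be readable only by its owner is an assumption; the user running Gitea inside the container must still be able to read it.

```shell
#!/bin/sh
set -e

workdir="$(mktemp -d)"
secret_file="$workdir/.INTERNAL_TOKEN"

# Dummy value for illustration only; the real token comes from the existing app.ini.
token="dummy-internal-token"

# printf '%s' writes the value without quotes and without a trailing newline.
# The subshell limits the restrictive umask (owner-only access) to this one file.
( umask 077; printf '%s' "$token" > "$secret_file" )

# Show the size in bytes as a quick sanity check.
wc -c < "$secret_file"
```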

In the app.ini it is now important to use the path specified in the compose file:

    # Gitea's app.ini
    # [...]
    [security]
    INTERNAL_TOKEN = /run/secrets/INTERNAL_TOKEN
    # [...]

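Not INTERNAL_TOKEN_URI=/run/secrets/INTERNAL_TOKEN as stated in the issue linked above, because in v1.22.1, which I am currently using, this produces the error Unsupported URI-Scheme. Gitea's configuration documentation gives file:/etc/gitea/internal_token as the example value for INTERNAL_TOKEN_URI, so the missing file: prefix may be what triggers that message. A plain INTERNAL_TOKEN entry, by contrast, is read as the token value itself rather than as a path to it.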

A very rough test

To check whether the changed configuration files still work, I install docker and docker-compose on my laptop. Then I try to start the containers.

# docker console log

Error: Network 'caddy-proxy' declared as external, but could not be found.

Oh right, Docker is not yet configured here. So create the network: sudo docker network create caddy-proxy and try again. The download of Gitea and its dependencies begins and the container starts - although not as I had imagined: The folder permissions within Docker are incorrect, meaning that neither Gitea nor Act-runner can access all the required files.

Nevertheless, I find the first error:

# gitea container log

WARNING: The GITEA_RUNNER_REGISTRATION_TOKEN variable is not set. Defaulting to a blank string.

I had forgotten to put the token string in quotation marks.
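Warnings of this kind can be caught before starting any container. The sketch below lists every `${VAR}` reference in a compose file that has no matching `VAR=` line in the env file. The function name `missing_env_vars` and the demo files are hypothetical. It only checks that a name is defined at all; quoting problems are outside its scope.

```shell
#!/bin/sh
# Print every ${VAR} referenced in a compose file that has no VAR= line in the env file.
missing_env_vars() {
  compose_file="$1"
  env_file="$2"
  grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$compose_file" | tr -d '${}' | sort -u |
  while read -r name; do
    grep -q "^${name}=" "$env_file" || echo "$name"
  done
}

# Demo files in a temporary folder; all values are dummies.
demo="$(mktemp -d)"
printf '%s\n' 'environment:' \
  '  - BORG_PASSPHRASE=${BORG_PASSPHRASE}' \
  '  - GITEA_RUNNER_REGISTRATION_TOKEN=${GITEA_RUNNER_REGISTRATION_TOKEN}' \
  > "$demo/docker-compose.yml"
printf '%s\n' 'BORG_PASSPHRASE="dummy"' > "$demo/.env"

missing_env_vars "$demo/docker-compose.yml" "$demo/.env"
# prints: GITEA_RUNNER_REGISTRATION_TOKEN
```

For a look at the fully resolved file, `docker compose config` prints the configuration with all variables substituted.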

So for a fully functional integration environment, I have to solve at least two more problems:

Folder permissions for the “main” copy on my laptop must be set up so that the Docker user also has write permissions. A simple solution for now is to append :Z to the volumes in question, which relabels their content for SELinux as private and unshared.

Now the volume definition in docker-compose.yml looks like this:

    # gitea/docker-compose.yml
    # [...]
    volumes:
      - ./gitea:/data:Z
    # [...]

I need a second file for environment variables to redirect my services to localhost. At least the service starts this way and I can see the log output. I can already spot most of the configuration errors.

I’m not sure, but I might have additional problems with the caddyserver such as certificate management, proxy settings and so on.

Create a deploy pipeline

Now it would be great if the files pushed to the Gitea repo (and previously tested locally) automatically found their way to my server. For this I could create another Docker volume into which act-runner places the checked-out data whenever the on: push trigger fires. The files would then be available on my server.

Setup

If we remember my last attempts to provide artifacts on the server using act_runner, we can use a large part of that for this task as well:

    # deploy-to-server.yml
    # Workflow for saving the server's config repo to the local disk system
    name: Upload-server-config
    run-name: $ uploads server configuration files
    on:
      push:
        branches:
          - main
    jobs:
      # Deploy job
      build:
        runs-on: ubuntu-latest # this is the "label" the runner will use and map to docker target OS
        container:
          volumes:
            # left: where the output will end up on disk, right: mount path inside the container
            - /opt/server-config:/workspace/schallbert/server-config/tmp
        steps:
          - name: --- CHECKOUT ---
            uses: actions/checkout@v3
            with:
              path: ./tmp
          - name: --- RUN FILE CHANGE TRIGGER ---
            run: |
              cd ./tmp/automation-hooks-trigger
              touch server-config-update.txt

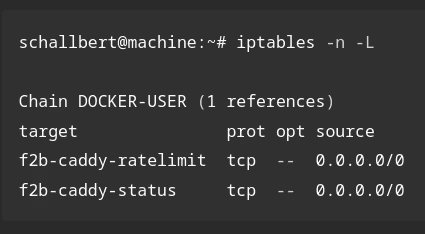

Strange volume errors

But the road to this point was rocky. For a long time I had specified only /server-config as the container-side path under volumes:, not the runner's working directory. With that setting the action ran through and all commands in the #TEST section worked, but the server-config folder that Docker created on my server remained empty.

I use the following debug code under RUN FILE CHANGE TRIGGER to help me find the path errors:

    # deploy-to-server.yml
    # [...]
    run: |
      echo "hello world"
      touch updated.txt
      pwd
      ls -al

I use echo to check whether my code is even being executed in the runner. The touch command creates updated.txt if it does not exist and sets its modification time to the current time, so that I can later use the file as a “hook” for further automation. pwd shows me the active path within the runner so that I can correctly map the Docker volume to the server hard drive. ls -al shows me whether the configuration files fetched in the CHECKOUT step were written correctly.

This tells me that the volume path on the “right side” was wrong. I redirect it to the active directory of the runner:

    # deploy-to-server.yml
    # [...]
    # left: where the output will end up on disk, right: mount path inside the container
    - /opt/server-config:/workspace/schallbert/server-config/
    # [...]

Then I got the following to read:

# gitea / act-runner console log

failed to create container: 'Error response from daemon: Duplicate mount point: /workspace/schallbert/server-config'

It seems that the runner creates the “right side” of the mount point itself, so the same path cannot be mounted a second time. Only after adding another path component, in my case /tmp (see above), did the error go away, and the long-awaited folder server-config finally appeared on my server 😌 with the following content:

    schallbert@schallbert-ubuntu-:/opt/server-config# ls -al
    total 52
    drwxr-xr-x 9 root root 4096 Jul 12 15:38 .
    drwxr-xr-x 9 root root 4096 Jul 11 19:56 ..
    -rwxr-xr-x 1 root root 395 Jul 11 20:05 boot-after-backup.sh
    drwxr-xr-x 3 root root 4096 Jul 11 20:05 borgmatic
    drwxr-xr-x 2 root root 4096 Jul 11 20:05 caddy2
    drwxr-xr-x 3 root root 4096 Jul 11 20:05 fail2ban
    drwxr-xr-x 8 root root 4096 Jul 12 15:38 .git
    drwxr-xr-x 3 root root 4096 Jul 11 20:05 .gitea
    drwxr-xr-x 4 root root 4096 Jul 11 20:05 gitea
    -rw-r--r-- 1 root root 312 Jul 11 20:05 .gitignore
    -rw-r--r-- 1 root root 557 Jul 11 20:05 README.md
    -rwxr-xr-x 1 root root 371 Jul 11 20:05 shutdown-for-backup.sh
    -rw-r--r-- 1 root root 0 Jul 12 15:38 updated.txt
    drwxr-xr-x 2 root root 4096 Jul 11 20:05 watchtower

Distributing the configuration on the server

Okay, that’s the first step. I now have a properly configured Git repository that shows my server configuration and can be maintained and at least rudimentarily tested from my laptop. I can also use an automatic Action to store configuration updates on the server and write an update file with a timestamp.
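How the update file could serve as a hook can be sketched with a simple timestamp comparison. This is only an illustration under assumptions: the function name `config_update_pending` and the marker file `last-rollout.marker` are hypothetical and not part of the setup described here.

```shell
#!/bin/sh
# Succeeds if the trigger file exists and is newer than the marker,
# or if no marker has been written yet.
config_update_pending() {
  trigger="$1"
  marker="$2"
  [ -e "$trigger" ] || return 1
  [ -e "$marker" ] || return 0
  [ -n "$(find "$trigger" -newer "$marker")" ]
}

demo="$(mktemp -d)"
touch -t 202401010000 "$demo/last-rollout.marker"  # pretend the last rollout was long ago
touch "$demo/updated.txt"                           # what the workflow's touch step does

if config_update_pending "$demo/updated.txt" "$demo/last-rollout.marker"; then
  echo "new configuration pending"
fi
```

After a successful rollout, touching the marker file would reset the comparison until the next push.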

Now the update has to be received on the server, distributed and the affected programs and services have to be restarted. But we’ll look at this in the article Roll out server configuration.