Better security for my server

Motivation

OK. I have set up my server, created an automated build pipeline for my blog, and my website displays the blog as it should. Still, I’m not completely done yet, because I want to do more for server-side security than deactivating password logins.

And I don’t have backups. That’s never good, so let’s get something done about that.

Locking away unwanted guests

In the SSH log of my server’s host system, I see a lot of failed authentication attempts:

[...]
Jan 11 00:19:29 sshd[93842]: Invalid user admin from 41.207.248.204 port 37194
Jan 11 00:19:29 sshd[93842]: pam_unix(sshd:auth): check pass; user unknown
Jan 11 00:19:29 sshd[93842]: pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ru>
Jan 11 00:19:31 sshd[93842]: Failed password for invalid user admin from 41.207.248.204 port 37194 ssh2
Jan 11 00:19:32 sshd[93842]: Connection closed by invalid user admin 41.207.248.204 port 37194 [preauth]
Jan 11 00:20:14 sshd[93886]: Invalid user svn from 84.108.40.27 port 44968
Jan 11 00:20:14 sshd[93886]: pam_unix(sshd:auth): check pass; user unknown
Jan 11 00:20:14 sshd[93886]: pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ru>
Jan 11 00:20:16 sshd[93886]: Failed password for invalid user svn from 84.108.40.27 port 44968 ssh2
Jan 11 00:20:16 sshd[93886]: Received disconnect from 84.108.40.27 port 44968:11: Bye Bye [preauth]
Jan 11 00:20:16 sshd[93886]: Disconnected from invalid user svn 84.108.40.27 port 44968 [preauth]
[...]

If I didn’t know that this is typical “internet noise”, I’d be nervous. Isn’t it close to thieves trying different keys at my front door every few seconds? So what can we do about it? Ban them.

fail2ban

That’s exactly what fail2ban can do for me.

  __      _ _ ___ _
 / _|__ _(_) |_  ) |__  __ _ _ _
|  _/ _` | | |/ /| '_ \/ _` | ' \
|_| \__,_|_|_/___|_.__/\__,_|_||_|
v1.1.0.dev1             20??/??/??

In short, fail2ban scans access/auth logs¹ for IP addresses causing repeated failed authentications and bans them once they exceed a user-defined threshold within a defined period of time.
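To make the threshold idea concrete, here is a minimal Python sketch of the counting logic. It is a toy model, not fail2ban’s actual implementation: the parameter names maxretry, findtime and bantime mirror fail2ban’s jail options (with fail2ban’s usual defaults of 5 tries, 10 minutes, 10 minutes), while the class BanTracker and its methods are hypothetical names for illustration.

```python
from collections import defaultdict, deque


class BanTracker:
    """Toy model of one fail2ban jail: ban an IP once it has `maxretry`
    failures within `findtime` seconds; the ban lasts `bantime` seconds."""

    def __init__(self, maxretry: int = 5, findtime: float = 600, bantime: float = 600):
        self.maxretry = maxretry
        self.findtime = findtime
        self.bantime = bantime
        self.failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self.banned_until = {}              # ip -> time at which the ban expires

    def is_banned(self, ip: str, now: float) -> bool:
        return self.banned_until.get(ip, float("-inf")) > now

    def record_failure(self, ip: str, now: float) -> bool:
        """Register one failed login at time `now`; return True if it triggers a ban."""
        if self.is_banned(ip, now):
            return False  # already banned; nothing new to do
        window = self.failures[ip]
        window.append(now)
        # Forget failures that are older than findtime (sliding window).
        while now - window[0] > self.findtime:
            window.popleft()
        if len(window) >= self.maxretry:
            self.banned_until[ip] = now + self.bantime
            window.clear()
            return True
        return False


if __name__ == "__main__":
    jail = BanTracker(maxretry=3, findtime=60, bantime=3600)
    results = [jail.record_failure("203.0.113.7", t) for t in (0, 10, 20)]
    print(results)                            # [False, False, True]
    print(jail.is_banned("203.0.113.7", 30))  # True
```

Keeping a per-IP queue of timestamps keeps the sliding window cheap: old failures drop off the front, new ones are appended at the back.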

How does fail2ban work? It modifies iptables, i.e. it adds packet filter rules (buzzword: firewall) on the network layer. So incoming requests from already banned IP addresses never even reach my applications².

install fail2ban

fail2ban seems to be close to an industry standard for blocking unwanted access requests on Linux. Every hobbyist admin I know uses it.

For installation and configuration of fail2ban, there’s a ton of guides out there, plus the (well-written) one inside its GitHub repository, so I won’t belabor the point here.

I chose to install it as a Docker container so that all dependencies are delivered automatically as well. I’m using the image by linuxserver and wrote the following docker-compose.yml to deploy it:

# /fail2ban/docker-compose.yml
version: "2.1"
services:
  fail2ban:
    image: lscr.io/linuxserver/fail2ban:latest
    container_name: fail2ban
    cap_add:
      - NET_ADMIN
      - NET_RAW
    network_mode: host
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
      - VERBOSITY=-vv #optional
    volumes:
      - ./config:/config
      - /var/log/auth.log:/var/log/auth.log:ro # host ssh
      - /var/log/caddy2:/var/log/caddy2:ro # gitea via caddy, caddyserver
    restart: unless-stopped

What I have to add here: fail2ban needs the access logs mounted as volumes (added above with :ro, i.e. read-only). A lot of filter rules come predefined in its config folder. I just had to add a modified jail.local (inspiration: linuxserver/fail2ban-confs) and a rule in filter.d for caddy, taken from muetsch.io, to complete my door watch.
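For orientation, here is what a minimal jail.local could look like, read with Python’s configparser (fail2ban’s config files are INI-style). The option names (enabled, filter, port, logpath, maxretry, findtime, bantime) are standard fail2ban jail options, but the values, the log path and the caddy-status jail are illustrative assumptions, not a copy of my file or of the linuxserver template. The helper effective_jails is hypothetical and only gives a simplified view, since fail2ban also merges jail.conf and filter defaults.

```python
import configparser

# Hypothetical minimal jail.local: real option names, assumed values.
JAIL_LOCAL = """
[DEFAULT]
bantime  = 1h
findtime = 10m
maxretry = 5

[sshd]
enabled = true
logpath = /var/log/auth.log

[caddy-status]
enabled  = true
port     = http,https
filter   = caddy-status
logpath  = /var/log/caddy2/*.log
maxretry = 10
"""


def effective_jails(text: str) -> dict:
    """Return enabled jails with [DEFAULT] values merged in (simplified view)."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    jails = {}
    for name in parser.sections():  # sections() does not include DEFAULT
        section = parser[name]
        if section.getboolean("enabled", fallback=False):
            jails[name] = dict(section)  # includes keys inherited from DEFAULT
    return jails


if __name__ == "__main__":
    for name, opts in effective_jails(JAIL_LOCAL).items():
        print(name, opts["logpath"], opts["maxretry"], opts["bantime"])
```

The [DEFAULT] section is the handy part: thresholds set there apply to every jail unless a jail overrides them, as caddy-status does with maxretry here.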

fail2ban example

This is what the fail2ban log looks like for my SSH daemon (sshd):

# schallbert:/opt/fail2ban/config/log/fail2ban# grep "220.124.89.47" fail2ban.log
2024-01-11 17:12:20,600 7FBB3630BB38 INFO [sshd] Found 220.124.89.47 - 2024-01-11 17:12:20
2024-01-11 17:12:23,003 7FBB3630BB38 INFO [sshd] Found 220.124.89.47 - 2024-01-11 17:12:22
2024-01-11 17:12:25,205 7FBB3630BB38 INFO [sshd] Found 220.124.89.47 - 2024-01-11 17:12:24
2024-01-11 17:12:27,206 7FBB3630BB38 INFO [sshd] Found 220.124.89.47 - 2024-01-11 17:12:26
2024-01-11 17:12:32,610 7FBB3630BB38 INFO [sshd] Found 220.124.89.47 - 2024-01-11 17:12:32
2024-01-11 17:12:33,045 7FBB36104B38 NOTIC [sshd] Ban 220.124.89.47

kbye!
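Instead of grepping for a single IP, a short Python sketch can tally the Found and Ban entries for all IPs at once. The regular expression is an assumption derived from the log lines above and may need adjusting for other fail2ban versions or log formats; summarize is a hypothetical helper.

```python
import re
from collections import Counter

# Assumed line shape, based on entries such as:
# 2024-01-11 17:12:33,045 7FBB36104B38 NOTIC [sshd] Ban 220.124.89.47
EVENT_RE = re.compile(r"\[(?P<jail>[^\]]+)\]\s+(?P<action>Found|Ban|Unban)\s+(?P<ip>\S+)")


def summarize(lines):
    """Count 'Found' events per (jail, ip) and track which pairs are currently banned."""
    found = Counter()
    banned = set()
    for line in lines:
        match = EVENT_RE.search(line)
        if match is None:
            continue  # not a Found/Ban/Unban line
        key = (match["jail"], match["ip"])
        if match["action"] == "Found":
            found[key] += 1
        elif match["action"] == "Ban":
            banned.add(key)
        else:  # "Unban"
            banned.discard(key)
    return found, banned
```

On the server, the file object of /opt/fail2ban/config/log/fail2ban/fail2ban.log can be passed to summarize directly, since iterating over a file yields its lines.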

What does not (yet) work: Gitea & fail2ban

My Gitea instance also receives quite a few authentication requests that I’d like to block. Unfortunately, I cannot get Gitea to write these access attempts to a log file; I only see them in the console. On the other hand, shell access to Gitea is already secured, as it is channeled through the same sshd that provides remote access to my server.

What is missing is a ban for failed web login requests.

I thought I had configured app.ini to write access logs to a file:

# gitea/conf/app.ini
#[...]
[log]
MODE = file
LEVEL = warn
ROOT_PATH = /data/gitea/log
ENABLE_ACCESS_LOGS = true
ENABLE_SSH_LOG = true
logger.access.MODE = access-file

[log.access-file]
MODE = file
ACCESS = file
LEVEL = info
FILE_NAME = access.log
[...]

I have both activated the logs with ENABLE_ACCESS_LOGS and configured logging to a file. access.log is being generated, but no authentication attempts are written to it. Maybe caddy’s reverse proxy snatches them away before they reach Gitea? I’m sure I’ll find out at some point.

Update August 2024

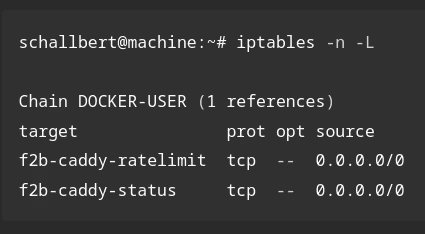

At some point I asked myself whether fail2ban actually changes the iptables of my server or only operates within the container. Luckily, I was not the first to ask this question (stackoverflow), and I found the proposed check quite charming:

- Block any IP inside the Docker container (after opening a shell with docker exec -it fail2ban sh):
  fail2ban-client set sshd banip 111.111.111.111
- Check the iptables to see whether this IP appears there:
  iptables -n -L --line-numbers
- In my case: enjoy, because it is there:
  1 REJECT all -- 111.111.111.111
- Unblock the IP again:
  fail2ban-client set sshd unbanip 111.111.111.111
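The manual check can also be scripted on the host. This Python sketch runs iptables -n -L --line-numbers (root required) and looks for rules whose source column matches the IP. It assumes the default listing layout with the columns num, target, prot, opt, source, destination, which other iptables versions may present slightly differently; rules_for_ip and host_has_ban are hypothetical names.

```python
import subprocess


def rules_for_ip(listing: str, ip: str) -> list:
    """Return the rule lines of an `iptables -n -L --line-numbers` listing
    whose source column equals `ip` (with or without a /32 suffix)."""
    hits = []
    for line in listing.splitlines():
        cols = line.split()
        # Rule lines look like: num target prot opt source destination [extras]
        if len(cols) >= 6 and cols[0].isdigit() and cols[4] in (ip, f"{ip}/32"):
            hits.append(line)
    return hits


def host_has_ban(ip: str) -> bool:
    """Query the host's packet filter (needs root) for rules matching `ip`."""
    listing = subprocess.run(
        ["iptables", "-n", "-L", "--line-numbers"],
        capture_output=True, text=True, check=True,
    ).stdout
    return bool(rules_for_ip(listing, ip))
```

Right after the banip step, host_has_ban("111.111.111.111") should return True, and False again after unbanip. Splitting the parsing from the subprocess call keeps the parser testable without root.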

Update July 2025

I have now found a solution for Gitea, as well as all my other websites:

- Protection against brute-force attacks on ssh, as described above
- Protection against overload using a rate limiter
- Protection against attacks on the APIs (404/403 attacks) using a caddy-status configuration for fail2ban, with caddy writing its logs in JSON

# /config/fail2ban/filter.d/caddy-status.conf
# this regex works for caddy with json-style logs
# fail2ban reads filter options from the [Definition] section
[Definition]
failregex = "client_ip":"<HOST>"(.*)"status":(400|401|403|404|500)
datepattern = \d+
ignoreregex =
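Before enabling such a filter, it helps to see which lines it would catch. fail2ban replaces <HOST> with its own, much more thorough host pattern; the IPv4-only group below is a simplified stand-in, and the sample lines are made up in the shape of caddy’s JSON access log. For testing against real logs, fail2ban ships its own fail2ban-regex tool; offending_host is a hypothetical helper.

```python
import re

# The failregex from caddy-status.conf, verbatim.
FAILREGEX = r'"client_ip":"<HOST>"(.*)"status":(400|401|403|404|500)'
# Simplified stand-in for fail2ban's <HOST> tag (IPv4 only, an assumption).
HOST_PATTERN = r"(?P<host>\d{1,3}(?:\.\d{1,3}){3})"
PATTERN = re.compile(FAILREGEX.replace("<HOST>", HOST_PATTERN))


def offending_host(line: str):
    """Return the client IP if the line matches the failregex, else None."""
    match = PATTERN.search(line)
    return match.group("host") if match else None


if __name__ == "__main__":
    # Made-up lines in the shape of caddy's JSON access log.
    probe = ('{"ts":1720000000.5,"request":{"client_ip":"203.0.113.9",'
             '"uri":"/wp-login.php"},"status":404}')
    normal = ('{"ts":1720000001.0,"request":{"client_ip":"198.51.100.4",'
              '"uri":"/"},"status":200}')
    print(offending_host(probe))   # 203.0.113.9
    print(offending_host(normal))  # None
```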

Regular backups

I want to be able to do disaster recovery. Unforeseen events impacting my server, like incompatible updates, machine downtime or security breaches, should not keep me from spawning and configuring a new, healthy instance in no time.

I’m not willing to create backups manually: copying and compressing folders, then downloading them to a backup location via SCP/SFTP. This should happen automatically and, ideally, at no cost. A quick search reveals two suitable open-source tools: borgmatic and restic.

I randomly chose Borgmatic.

install Borgmatic with Docker

As with all other components so far, I want Borgmatic to run in a Docker environment. Luckily, there are ready-made solutions that need just a little configuration.

This is what my docker-compose file looks like:

# /borgmatic/docker-compose.yml
version: '3'
services:
  borgmatic:
    image: ghcr.io/borgmatic-collective/borgmatic
    container_name: borgmatic
    volumes:
      - ${VOLUME_SOURCE}:/mnt/source:ro # backup source
      - ${VOLUME_TARGET}:/mnt/repository # backup target
      - ${VOLUME_ETC_BORGMATIC}:/etc/borgmatic.d/ # borgmatic config file(s) + crontab.txt
      - ${VOLUME_BORG_CONFIG}:/root/.config/borg # config and keyfiles
      - ${VOLUME_SSH}:/root/.ssh # ssh key for remote repositories
      - ${VOLUME_BORG_CACHE}:/root/.cache/borg # checksums used for deduplication
      # - /var/run/docker.sock:/var/run/docker.sock # add docker sock so borgmatic can start/stop containers to be backed up
    environment:
      - TZ=${TZ}
      - BORG_PASSPHRASE=${BORG_PASSPHRASE}
    restart: always

My inspiration was the related documentation on GitHub. As you can see, Borgmatic requires quite a few volumes to work properly, most importantly the backup source and target locations.

configure Borgmatic

All concrete values behind the ${} placeholders are collected in an .env environment variable file. This helps me avoid publishing passphrases by accident. Of course, I have also noted the passphrase down on paper as a “backup” of the backup.
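To illustrate what happens to those placeholders, here is a simplified Python model of how docker compose fills ${VAR} references from the .env file. It only understands plain KEY=value lines; real compose additionally handles quoting, defaults such as ${VAR:-default} and more. parse_env and render are hypothetical helpers, and the values are made up.

```python
from string import Template


def parse_env(text: str) -> dict:
    """Parse plain KEY=value lines, skipping blank lines and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = line.partition("=")
        if sep:
            env[key.strip()] = value.strip()
    return env


def render(compose_text: str, env: dict) -> str:
    """Fill ${VAR} placeholders; raises KeyError for variables missing from env."""
    return Template(compose_text).substitute(env)


if __name__ == "__main__":
    # Hypothetical values; the real .env never leaves the server.
    env = parse_env("TZ=Europe/Berlin\nBORG_PASSPHRASE=not-my-real-passphrase\n")
    print(render("environment:\n  - TZ=${TZ}", env))
```

One deliberate difference: compose substitutes an empty string (with a warning) for unset variables, while Template.substitute raises KeyError, so a typo in a variable name surfaces immediately.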

I then modify config.yml in borgmatic.d/ slightly, adding backup source, target, count, and cycle. That’s all, I’m ready for a first test.
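Borgmatic hands its retention settings on to borg prune. As a rough illustration of what a keep-daily style rule means, here is a simplified Python model: keep the newest archive of each of the last N days that have archives, and prune the rest. It ignores the weekly/monthly/yearly rules and other details of borg’s real prune logic; keep_daily is a hypothetical name and the archive dates are made up.

```python
from datetime import datetime


def keep_daily(archives, n: int):
    """Split archive timestamps into (keep, prune), both newest first, under a
    simplified keep-daily-N rule: keep the newest archive of each of the N most
    recent days that have archives."""
    keep, prune = [], []
    kept_days = set()
    for ts in sorted(archives, reverse=True):  # newest first
        day = ts.date()
        if day not in kept_days and len(kept_days) < n:
            kept_days.add(day)
            keep.append(ts)  # first (= newest) archive seen for this day
        else:
            prune.append(ts)
    return keep, prune


if __name__ == "__main__":
    # Made-up archives: two backups per day on January 1 to 3.
    archives = [datetime(2024, 1, d, h) for d in (1, 2, 3) for h in (2, 14)]
    keep, prune = keep_daily(archives, n=2)
    print([ts.isoformat() for ts in keep])
```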

testing Borgmatic backup

docker exec borgmatic bash -c \
  "cd && borgmatic --stats -v 1 --files 2>&1"

This command has borgmatic create a backup for me through the Docker container.

I got an error message right away, saying the repository does not exist. A look into the docs reveals that I have to create the backup target repository manually.

The following command does this, creating an empty, encrypted repo:

docker exec borgmatic bash -c \
  "borgmatic init --encryption repokey-blake2"

Now I retry the manual backup creation, which runs without errors.

A backup is only a backup…

…once it has been successfully restored, as a friend of mine said.

He is right. Still, I don’t dare to replace my working server configuration with a backup. As a compromise, I go halfway and create a docker-compose.restore.yml following the online guide. For additional help, I read modem7’s related blog post.

For my test, I start the restore container from docker-compose.restore.yml, open its shell and enter the following commands:

mkdir backuprestoremount
borg mount /mnt/repository /backuprestoremount
mkdir backuprestore
borgmatic extract --archive latest --destination /backuprestore

Here, I create the folder /backuprestoremount and mount my borg repository there. Then, I extract the latest archive into /backuprestore.

Let’s do a sanity check:

# cd /mnt/backuprestore
# /restore/mnt/source ls
borgmatic caddy2 containerd fail2ban gitea hostedtoolcache watchtower

Yeah! The backup contains all applications along with their files. I stop at this point, as I’m too lazy and cowardly to overwrite my working setup, as mentioned above.
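The eyeball check could also be automated. This Python sketch reports which expected top-level folders are missing or empty in a restored tree. The expected names come from the ls output above; which directory to point it at depends on where the archive was extracted, so restore_root is left as a parameter, and missing_folders is a hypothetical helper.

```python
from pathlib import Path

# Top-level folders seen in my restored backup (from the ls output).
EXPECTED = {"borgmatic", "caddy2", "containerd", "fail2ban", "gitea",
            "hostedtoolcache", "watchtower"}


def missing_folders(restore_root, expected=EXPECTED) -> set:
    """Return the expected top-level folders that are absent or empty under restore_root."""
    root = Path(restore_root)
    # A folder only counts as present if it is a directory with at least one entry.
    present = {p.name for p in root.iterdir() if p.is_dir() and any(p.iterdir())}
    return set(expected) - present
```

An empty result means every application folder came back with content; it still says nothing about whether the files inside are consistent.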

What (yet) doesn’t work: Borgmatic & docker-compose down

Borgmatic offers a simple way to run pre- and post-backup actions. As I want to maintain consistency within my containers, I want them to be shut down before and restarted after the backup run. So I wrote a script, hooked it into the config and… nothing.

In hindsight, this is easy to explain: it can only work directly if Borgmatic runs on bare metal. Docker’s encapsulation doesn’t let borgmatic run shell scripts outside its container. I thought I could circumvent this problem by mounting /var/run/docker.sock in the docker-compose file, but without success.

I’m sure this can be solved somehow. For the time being, I have more important problems to solve, so I’ll live with the assumption “there are no data inconsistencies”³.
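One direction I might try later (untested): drive the whole thing from the host instead of from inside the container. The Python sketch below stops the containers, triggers the backup via docker exec with the same command as in my manual test, and starts the containers again even if the backup fails. The container names in the usage note are hypothetical, and the runner is injectable so the sequence can be dry-run without touching Docker.

```python
import subprocess
from contextlib import contextmanager


def run(cmd):
    """Run a command on the host; raise CalledProcessError on failure."""
    subprocess.run(list(cmd), check=True)


@contextmanager
def stopped(containers, runner=run):
    """Stop the given containers for the duration of the with-block."""
    runner(["docker", "stop", *containers])
    try:
        yield
    finally:
        # Start them again even if the backup raised an exception.
        runner(["docker", "start", *containers])


def backup(containers, runner=run):
    """Stop containers, run borgmatic inside its own container, restart containers."""
    with stopped(containers, runner):
        runner(["docker", "exec", "borgmatic", "bash", "-c",
                "cd && borgmatic --stats -v 1 --files 2>&1"])
```

For a dry run, backup(["gitea", "caddy"], runner=print) only prints the three commands. The borgmatic container itself must not be in the list, since the backup runs inside it.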

1. The SSH daemon of my server writes its authentication logs to /var/log/auth.log; in other applications, the file could also be named access.log or similar. Services like caddy or gitea also create auth log files.
2. However, what I do not yet understand is how fail2ban is able to access the iptables from within the container. I thought a positive side effect of containerization was encapsulation. Or is that only complete with “rootless” containers?
3. Background: I’m currently the only one contributing to my gitea instance. My website is static. The webserver caddy only handles changed files once they are pushed to Gitea. I have scheduled application updates via watchtower and unattended-upgrades (see my follow-up post) at night, so they won’t interfere with borgmatic runs. Only the logs for fail2ban are still written independently. I accept the risk of data loss here, because packet filtering is subject to change anyway.